Introduction

Traditional model validation has become a staple of the finance industry: a well-understood and rigorously developed line of defense intended to help institutions avoid unnecessary financial losses caused by poorly performing models. Relying on statistical tools such as logistic regression, time series modelling, and model benchmarking, the field has progressed steadily.

Many institutions have already improved their traditional model validation tools by incorporating machine learning (ML) and artificial intelligence (AI) to identify additional features that better predict outcomes or disasters. ML/AI algorithms use much larger datasets and uncover hidden relationships that often lead to large improvements in predictive power. However, the datasets used to develop ML/AI tools are often static, even if they are updated at discrete intervals. One can split any dataset into in-time and out-of-time samples, but even that remains a kind of static dataset.

In real-life situations, one may need non-static models that go beyond static ML/AI. We are seeing a new wave in model validation in consumer credit that supplements the traditional and ML/AI algorithms by incorporating truly dynamic elements, intended to catch models as they might be failing, using real-time updated data and AI algorithms. These models can be sensitized to unexpected changes in data or predictions, triggering review or corrective action when necessary.

This paper presents a case study of a consumer credit adjudication model, showcasing its performance under both traditional and ML/AI methods. It also discusses examples of dynamic processes that can improve the process even further, and how financial institutions might implement these models.

Redefining Model Validation: Beyond Traditional Methods

Traditional model validation techniques, which rely heavily on historical data and static validation methods, have proven effective under stable conditions. However, these methods can fall short in the face of rapid market changes or unprecedented events like the COVID-19 pandemic. To address these challenges, this article first looks at the conventional model validation steps, then examines the integration of technology and a multi-faceted approach that incorporates real-time data, machine learning, and dynamic validation frameworks. It concludes with a case study comparing traditional model development, an AI-enhanced model, and a dynamic approach.

The three primary phases of traditional model validation are as follows:

1-Conceptual Soundness Review:

This stage involves a comprehensive evaluation of the model's design and construction quality. It includes scrutinizing the documentation and empirical support for the methodologies and variables chosen. This step ensures that the decision-making in the model's design is well-founded, thoroughly considered, and aligns with established research and industry best practices. Finally, by comparing the model choices with alternatives that were not chosen, important insights may be developed for implementing and updating the model.

2-Ongoing Performance Monitoring:

This phase entails continuous verification to ensure the model is implemented correctly and functioning as intended. It is crucial to assess whether changes in products, exposures, activities, clients, or market conditions require adjustments, redevelopment, or replacement of the model. Furthermore, it ensures that any extensions beyond the model's original scope are valid. Benchmarking is employed during this phase to compare the model's inputs and outputs against alternative estimates.

3-Outcome Validation:

This phase involves comparing the model's predictions to actual outcomes. One effective method is back-testing, which compares the model's forecasts to actual results over a period not used during the model's development. This analysis should be conducted at a frequency that aligns with the model's forecast horizon or performance window, allowing for early action in the event of model failure.
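As a rough illustration of back-testing, the sketch below compares the average predicted default probability with the default rate actually observed on an out-of-time sample, using a normal approximation to the binomial distribution. The function name `backtest_default_rate`, the 1.96 critical value, and the sample data are assumptions made for this example, not part of any specific validation standard.

```python
import math

def backtest_default_rate(predicted_pds, outcomes, z_crit=1.96):
    """Compare the mean predicted PD with the realized default rate.

    predicted_pds: model probabilities of default for an out-of-time sample.
    outcomes: realized results for the same accounts (1 = default, 0 = no default).
    z_crit: illustrative two-sided critical value (assumption).
    """
    if len(predicted_pds) != len(outcomes) or not outcomes:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(outcomes)
    expected = sum(predicted_pds) / n
    observed = sum(outcomes) / n
    # Standard error of the default rate under the model's own forecast.
    std_err = math.sqrt(expected * (1.0 - expected) / n)
    if std_err > 0:
        z = (observed - expected) / std_err
    else:
        # Degenerate forecast (all PDs equal to 0 or 1): any miss is a breach.
        z = 0.0 if observed == expected else float("inf")
    return {"expected": expected, "observed": observed,
            "z": z, "breach": abs(z) > z_crit}

# Hypothetical out-of-time sample: 1,000 accounts with a forecast PD of 5%.
forecast = [0.05] * 1000
in_line = backtest_default_rate(forecast, [1] * 50 + [0] * 950)
deteriorated = backtest_default_rate(forecast, [1] * 100 + [0] * 900)
```

In this toy sample the first result stays within tolerance, while the second (a realized default rate of 10% against a 5% forecast) is flagged as a breach that would warrant review.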

Embracing Change: Preparing for a Dynamic Financial Future

To be prepared for the challenges of a rapidly evolving world, where every aspect is undergoing significant change and turning points are defining our future, we must be proactive and agile. Our commitment to creating a financial world that is not only safe and sound but also better functioning than before requires us to embrace these changes swiftly.

By being quick and adaptable, we can navigate the complexities of the new landscape and ensure that our financial systems are robust and resilient, capable of thriving in the face of uncertainty and innovation.

The new wave in model validation consists of four interrelated components that enhance the robustness and effectiveness of the validation process dynamically:

Key Components of the New Approach:

- Real-Time Data Integration

  - Updated model parameters

  - Related dynamic data: Warning signals

- Dynamic Validation Frameworks

  - Validation updates

  - Challenge models

  - Identification of model weaknesses

- Machine Learning and AI

- Stress Testing and Scenario Analysis

  - Expanding beyond model variables

Real-Time Data Integration:

One of the primary limitations of traditional model validation is the reliance on historical data. In a rapidly changing market, historical trends may not accurately predict future risks. Some questions to address:

- How can real-time data integration improve the accuracy and robustness of financial models?

- What challenges exist in collecting, processing, and using real-time data for model validation?

- How can we ensure data quality and reliability in real-time data streams?

- Should the dataset be dynamically expanded to provide more robust future models?

Integrating real-time data into the validation process enables models to adapt swiftly to new information, thereby enhancing their predictive power and robustness. For example, by incorporating real-time volatility indices, models can dynamically adjust their risk parameters, leading to more accurate risk assessments during periods of market turbulence. A financial institution validating a credit risk model could use real-time VIX data in this way; the validation process then involves testing the model's performance in assessing credit risk and making lending decisions based on this real-time data.

Another example could be a financial institution managing a portfolio of corporate equity options. The institution can continuously monitor real-time CDS (Credit Default Swap) spreads for each company on which the options are traded. If the CDS spread of a particular issuer widens significantly, it indicates that higher volatility may materialize in the stock and, consequently, in the option prices. With this information, the model can promptly adjust its risk assessment and suggest appropriate risk mitigation actions, such as rebalancing the portfolio or adjusting limits.
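One simple way to make "widens significantly" concrete is to compare each issuer's latest spread with its own recent history. The sketch below flags an issuer when the latest spread lies more than a chosen number of standard deviations above its historical mean. The function name, the z-score rule, the threshold of 3.0, and the issuer names and figures are all assumptions for illustration; a production system would draw spreads from a market data feed and would likely use a more robust rule.

```python
from statistics import mean, stdev

def spread_widening_alerts(history, latest, z_threshold=3.0):
    """Return (issuer, z_score) pairs for issuers whose CDS spread has widened.

    history: dict mapping issuer -> list of past spreads in basis points.
    latest: dict mapping issuer -> most recent spread in basis points.
    z_threshold: illustrative alert level (assumption).
    """
    alerts = []
    for issuer, past in history.items():
        if issuer not in latest or len(past) < 2:
            continue  # not enough information to judge this issuer
        mu, sigma = mean(past), stdev(past)
        if sigma == 0:
            continue  # flat history: a z-score is undefined
        z = (latest[issuer] - mu) / sigma
        if z > z_threshold:
            alerts.append((issuer, round(z, 2)))
    return alerts

# Hypothetical spreads for two made-up issuers.
history = {"ACME": [100, 102, 98, 101, 99], "BETA": [200, 205, 195, 202, 198]}
latest = {"ACME": 130, "BETA": 201}
alerts = spread_widening_alerts(history, latest)
```

Here only the first issuer is flagged, which is the point at which the risk model would be asked to reassess the related option positions.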

Ensuring the continuous and accurate collection of real-time data from various sources can be complex. APIs and automated data pipelines help address this issue by streamlining collection. Real-time data also requires efficient processing systems to handle the volume and velocity of incoming information. Scalable data architectures, such as cloud-based platforms backed by high-performance computing, can meet these needs.

Dynamic Validation Frameworks:

The second component in our novel approach to model validation is a dynamic validation framework. Static validation frameworks are often insufficient in addressing the dynamic nature of financial markets. The use of dynamic validation frameworks that can adapt in real-time to changes in market conditions and regulatory requirements is necessary. These frameworks feature continuous monitoring, where models are continuously validated against new data to ensure they remain relevant and accurate. Additionally, adaptive algorithms adjust their parameters based on the latest data and trends, maintaining their effectiveness over time.

For instance, consider population stability testing, which assesses whether the characteristics of the population being scored by the model have significantly changed over time. In a dynamic validation framework, this testing must be continuous. If a credit risk model detects that the distribution of key variables, such as income levels or debt-to-income ratios, has shifted significantly compared to the training data, the dynamic validation framework can trigger an immediate recalibration or alert. This real-time adjustment ensures that the model continues to perform accurately and remains aligned with the current population characteristics, thereby enhancing its reliability and predictive power.
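Population stability is often summarized with the Population Stability Index (PSI), which compares the share of accounts falling into each bin of a variable at development time with the share observed today. The sketch below is a minimal version under stated assumptions: the bin edges, the sample debt-to-income values, and the 0.10 and 0.25 cut-offs (commonly quoted rules of thumb, not regulatory limits) are all illustrative.

```python
import math

def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between a baseline and a current sample.

    expected: values of one variable in the development (training) data.
    actual: values of the same variable in the currently scored population.
    edges: ascending bin boundaries; len(edges) + 1 bins are formed.
    eps: floor on bin shares to avoid log(0) for empty bins (assumption).
    """
    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            # Bin index = number of boundaries at or below the value.
            counts[sum(1 for e in edges if v >= e)] += 1
        return [max(c / len(values), eps) for c in counts]

    base, curr = shares(expected), shares(actual)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, curr))

def drift_status(value, monitor_at=0.10, recalibrate_at=0.25):
    """Map a PSI value to an action using illustrative rule-of-thumb cut-offs."""
    if value >= recalibrate_at:
        return "recalibrate"
    return "monitor" if value >= monitor_at else "stable"

# Hypothetical debt-to-income ratios: training sample vs. a shifted population.
edges = [0.15, 0.25, 0.35]
baseline = [0.1, 0.2, 0.3, 0.4] * 25
shifted = [0.3, 0.4] * 50
status_same = drift_status(psi(baseline, baseline, edges))
status_shifted = drift_status(psi(baseline, shifted, edges))
```

In a continuous setting, the same calculation would be rerun on each new batch of scored applications, with the status driving the alert or recalibration trigger described above.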

We must be vigilant about the challenges inherent in dynamic validation frameworks. Managing complexity is paramount, as these frameworks are inherently more sophisticated than static ones. This complexity requires the use of advanced tools and expertise. Ensuring compliance with evolving regulatory standards is another critical challenge. Dynamic models must remain aligned with these standards, which can frequently change, posing a significant regulatory burden.

Furthermore, it is essential to prevent models from deviating from their original objectives due to changing data and market conditions. Continuous monitoring is crucial to address this issue, but it demands substantial computational resources. By staying alert to these challenges and addressing them proactively, we can ensure that our dynamic validation frameworks remain robust, accurate, and compliant, ultimately supporting more effective decision-making and risk management.

To handle the resource demands of dynamic validation, we need to use cloud computing and scalable infrastructure. Integrating AI solutions to stay updated with regulatory changes is also essential to ensure compliance.

Machine Learning and AI:

People often discuss machine learning (ML) and artificial intelligence (AI), but few can truly articulate the transformative potential these technologies hold for our lives. To harness the power of AI and ML effectively, we must be a wise society and prudent financial institutions, laying a strong foundation for future generations to build upon without feeling threatened by these advancements. To achieve this, we must ask ourselves critical questions, as answering them is as vital as validating a model itself. For instance:

- How can we ensure the methodologies and variables used in the model are robust and align with current industry standards?

- What AI tools can we use to automate the review process?

These questions drive us to consider how AI and ML can be leveraged to improve accuracy, efficiency, and innovation in our work, ensuring that we not only adapt to but also shape the future.

Machine learning (ML) and artificial intelligence (AI) offer advanced capabilities for enhancing model validation. These technologies leverage vast computational power and sophisticated algorithms to process and analyze large datasets, identify intricate patterns, and continuously refine model accuracy through iterative learning processes. Benefits include improved pattern recognition, enhanced anomaly detection, continuous learning and improvement, and greater efficiency in handling complex datasets.

Natural Language Processing (NLP):

Among the many ML and AI techniques, NLP stands out in the review phase because it can automatically identify inconsistencies in methodology descriptions. NLP can also support risk assessment: by applying sentiment analysis to news articles, financial reports, and social media posts, institutions can gauge the sentiment surrounding specific borrowers or sectors.

This can provide additional insights into potential risks that may not be captured by traditional quantitative models.

Automated Benchmarking:

The novel approach in validation stages involves automating all aspects, including benchmarking. Automated benchmarking uses ML algorithms to compare model components against a comprehensive dataset of industry standards and best practices. The objective is to identify any deviations that might require further scrutiny. This approach enhances the efficiency, accuracy, and robustness of the validation process, ensuring that financial models meet industry standards and effectively manage risk.

Pattern Recognition:

AI and ML excel at identifying subtle and complex patterns in data that traditional statistical methods might miss. For instance, in financial data, these technologies can uncover non-linear relationships between variables, enabling more accurate predictions and insights. This capability is crucial for developing models that can adapt to changing market conditions and improve their predictive power.

Anomaly Detection:

One of the strengths of ML algorithms is their ability to detect anomalies or outliers—data points that deviate significantly from the norm. In the context of financial markets, anomaly detection can help identify unusual trading activities, potential fraud, or system malfunctions early on. By flagging these anomalies promptly, organizations can mitigate risks before they escalate into significant issues.

Predictive Accuracy:

By leveraging advanced algorithms, ML models can achieve higher predictive accuracy compared to traditional models. Ensemble techniques such as gradient boosting combine multiple models to enhance overall performance, while neural networks capture complex non-linear structure, reducing prediction errors and improving reliability.

Continuous Learning and Improvement:

Traditional models often require manual updates and recalibration to maintain their accuracy. In contrast, ML models can continuously learn from new data, automatically adjusting and improving their performance over time. This dynamic learning process ensures that the models remain relevant and effective as the underlying data evolves.
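To make the idea of continuous learning concrete, the sketch below updates a logistic model one observation at a time using stochastic gradient descent, so that each new outcome nudges the parameters without a full retraining run. The class name `OnlineLogisticModel`, the learning rate, and the toy data stream are assumptions for illustration; this is one simple form of incremental learning, not a description of how any particular production model is updated.

```python
import math

class OnlineLogisticModel:
    """Logistic regression updated incrementally, one observation at a time."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate  # illustrative value (assumption)

    def predict_proba(self, x):
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, x, y):
        """Take one gradient step on the log-loss for a single (x, y) pair."""
        error = self.predict_proba(x) - y
        self.weights = [w - self.learning_rate * error * xi
                        for w, xi in zip(self.weights, x)]
        self.bias -= self.learning_rate * error

# Toy stream: a positive feature value is followed by default, a negative one is not.
model = OnlineLogisticModel(n_features=1)
for _ in range(200):
    model.update([1.0], 1)
    model.update([-1.0], 0)

p_high_risk = model.predict_proba([1.0])
p_low_risk = model.predict_proba([-1.0])
```

Automatic updates of this kind still need the monitoring and controls discussed elsewhere in this article, since a model that learns continuously can also drift away from its original objective.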

Stress Testing and Scenario Analysis

Stress testing and scenario analysis are crucial components of a robust validation framework. By simulating extreme market conditions and unexpected events, these techniques help identify potential vulnerabilities in models and ensure they can withstand adverse scenarios such as economic downturns and market crashes. Quantitative analysts use simulation techniques to test the resilience of credit risk models, a critical step in the model validation process, and to assess the impact of sudden market crashes on portfolio risk models.
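A very simple form of scenario analysis recomputes portfolio expected loss (probability of default × loss given default × exposure at default) under shocked inputs. The sketch below does this for a two-loan toy portfolio. The scenario names, the shock sizes, and the portfolio figures are hypothetical and chosen only to show the mechanics.

```python
def expected_loss(portfolio, pd_multiplier=1.0, lgd_addon=0.0):
    """Sum of PD x LGD x EAD across loans, after applying scenario shocks.

    pd_multiplier scales each probability of default; lgd_addon is added to
    each loss given default. Both are capped at 1.0.
    """
    total = 0.0
    for loan in portfolio:
        stressed_pd = min(1.0, loan["pd"] * pd_multiplier)
        stressed_lgd = min(1.0, loan["lgd"] + lgd_addon)
        total += stressed_pd * stressed_lgd * loan["ead"]
    return total

def run_scenarios(portfolio, scenarios):
    """Evaluate expected loss under every named scenario."""
    return {name: expected_loss(portfolio, **shock)
            for name, shock in scenarios.items()}

# Hypothetical portfolio and scenarios (all figures are assumptions).
portfolio = [
    {"pd": 0.02, "lgd": 0.50, "ead": 10_000},
    {"pd": 0.10, "lgd": 0.40, "ead": 5_000},
]
scenarios = {
    "baseline": {"pd_multiplier": 1.0, "lgd_addon": 0.0},
    "downturn": {"pd_multiplier": 2.0, "lgd_addon": 0.10},
    "severe": {"pd_multiplier": 4.0, "lgd_addon": 0.20},
}
results = run_scenarios(portfolio, scenarios)
```

Comparing the stressed results with the baseline shows how sensitive the model's output is to each shock, which is the kind of vulnerability this section is concerned with.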

By integrating these components, financial organizations, lenders, fintech companies, and banks can build more accurate and robust models. This approach not only enhances model validation but also supports better decision-making and risk management in an increasingly dynamic financial environment.

Case Study: Credit Underwriting Model Validation Using Traditional and AI-Enhanced Methods

To demonstrate the effectiveness of these innovative validation techniques, we conducted a case study using the "Default of Credit Card Clients" dataset. The objective was to evaluate the performance of AI-enhanced credit risk models compared to traditional models. The dataset includes detailed information on credit card clients, such as various financial indicators and their default status on credit card payments, allowing for a comprehensive analysis of model efficacy.

Data Description

The dataset contains the following features:

- LIMIT_BAL: Amount of given credit.

- SEX: Gender (1=male, 2=female).

- EDUCATION: Education level.

- MARRIAGE: Marital status.

- AGE: Age in years.

- PAY_0 to PAY_6: History of past payment.

- BILL_AMT1 to BILL_AMT6: Amount of bill statement.

- PAY_AMT1 to PAY_AMT6: Amount of previous payment.

- DEFAULT PAYMENT NEXT MONTH: Whether the client defaults on the payment next month (1=yes, 0=no).

Methodology:

Two distinct approaches were employed in the model validation:

- Traditional Validation (Logistic Regression): A Logistic Regression model was trained and validated using traditional methods. Metrics such as accuracy, ROC/AUC, Gini Coefficient, and KS Score were calculated.

- AI-Enhanced Validation (XGBoost): An XGBoost model was trained and validated using advanced machine learning techniques. Similar metrics were calculated for comparison.

The comparison of traditional and AI-enhanced model validation shows an improvement in every predictive metric reported below for the AI-enhanced model. Some of the key statistics and graphs follow.

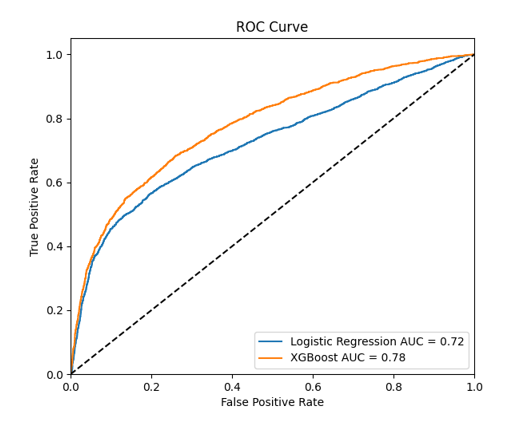

ROC/AUC:

The Area Under the ROC Curve (AUC) provides a single scalar value to compare models. A model with an AUC of 1.0 is perfect, while an AUC of 0.5 suggests no discriminative power (equivalent to random guessing).

Graph 1. ROC Curves of Traditional Credit Risk Model vs AI-Enhanced Model

The AI-enhanced model (XGBoost) achieved a higher AUC (0.78) compared to the traditional model (Logistic Regression) with an AUC of 0.72.
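For readers who want to see what the AUC measures, the sketch below computes it directly from its probabilistic interpretation: the chance that a randomly chosen defaulter receives a higher score than a randomly chosen non-defaulter, with ties counted as one half. The labels and scores are toy values, not the case-study data, and the pairwise loop is written for clarity rather than speed.

```python
def auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of scores.

    labels: 1 for the positive class (default), 0 for the negative class.
    scores: model scores, where higher means more likely to default.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    wins = 0.0
    # O(n_pos * n_neg): fine for illustration, slow for large samples.
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))

# Toy example (not the case-study data).
toy_labels = [0, 0, 1, 1]
toy_scores = [0.1, 0.4, 0.35, 0.8]
toy_auc = auc(toy_labels, toy_scores)  # 3 of 4 pairs ranked correctly -> 0.75
```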

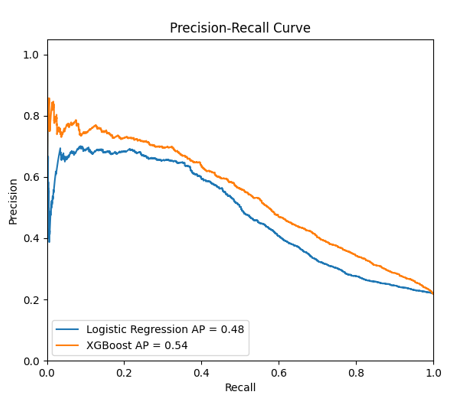

Recall

Another important statistic is recall. Also known as sensitivity or true positive rate (TPR), it measures the ability of a model to correctly identify all positive instances in the dataset.

Graph 2. Precision-Recall Curve of Traditional Model vs AI Model

The AI-enhanced model (XGBoost) shows superior performance in maintaining high precision across various recall levels compared to the traditional model (Logistic Regression).

This indicates that the XGBoost model is more effective at identifying true positives while maintaining a good balance with precision, another indicator of how AI can help in financial decision-making.
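Each point on a precision-recall curve corresponds to one decision threshold. The sketch below computes precision and recall at a given threshold from labels and scores; sweeping the threshold traces out the curve. The toy data and the convention of returning a precision of 1.0 when nothing is flagged are assumptions made for this example.

```python
def precision_recall(labels, scores, threshold):
    """Precision and recall when scores at or above `threshold` are flagged.

    labels: 1 for default, 0 for non-default.
    """
    tp = fp = fn = 0
    for y, s in zip(labels, scores):
        flagged = s >= threshold
        if flagged and y == 1:
            tp += 1
        elif flagged and y == 0:
            fp += 1
        elif not flagged and y == 1:
            fn += 1
    # Convention (assumption): precision is 1.0 when no account is flagged.
    precision = tp / (tp + fp) if (tp + fp) else 1.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall

# Toy example (not the case-study data).
toy_labels = [0, 0, 1, 1]
toy_scores = [0.1, 0.4, 0.35, 0.8]
strict = precision_recall(toy_labels, toy_scores, threshold=0.5)
lenient = precision_recall(toy_labels, toy_scores, threshold=0.3)
```

The strict threshold flags only the highest score, so every flag is correct but one defaulter is missed; the lenient threshold catches both defaulters at the cost of one false alarm. This is the trade-off the curve visualizes.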

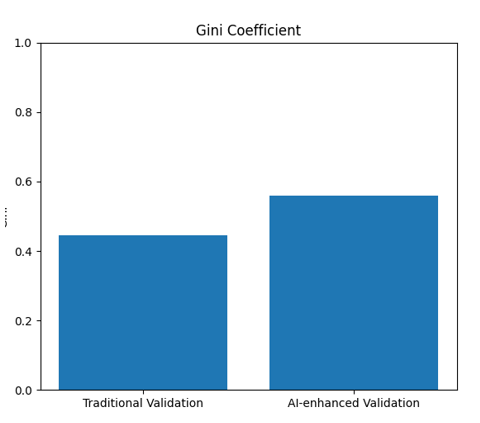

Gini Coefficient

The Gini coefficient is a measure of inequality or dispersion. In the context of model validation and performance, particularly in credit risk modeling, the Gini coefficient is used to assess the discriminatory power of a predictive model. It is closely related to the Area Under the Receiver Operating Characteristic Curve (AUC).

A higher Gini coefficient indicates that the model has a better ability to discriminate between good (non-default) and bad (default) credit risks. A model with a high Gini coefficient can effectively rank clients based on their likelihood of defaulting, which is crucial for financial institutions when making lending decisions.

In our case study, the Gini coefficient is 0.44 for the traditional Logistic Regression model and 0.56 for the AI-enhanced XGBoost model.

Bar Chart 1. Gini Coefficient of Traditional Model vs AI Model

The higher Gini coefficient of the XGBoost model indicates superior discriminatory power compared to the Logistic Regression model. In other words, the AI-enhanced model is more effective in differentiating between clients who will default and those who will not, thereby providing better predictive performance.
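The relationship between the two measures is the standard identity Gini = 2 × AUC − 1. As a quick consistency check, the short sketch below applies it to the AUC values reported earlier (0.72 and 0.78) and recovers the Gini coefficients quoted in this section. The function and variable names are illustrative.

```python
def gini_from_auc(auc):
    """Convert an AUC value to the Gini coefficient used in credit scoring."""
    if not 0.0 <= auc <= 1.0:
        raise ValueError("AUC must lie between 0 and 1")
    return 2.0 * auc - 1.0

# AUC values as reported in the case study.
reported_auc = {"Logistic Regression": 0.72, "XGBoost": 0.78}
implied_gini = {name: round(gini_from_auc(a), 2)
                for name, a in reported_auc.items()}
```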

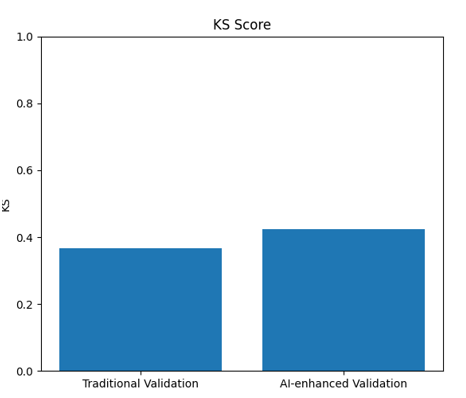

Kolmogorov-Smirnov (KS) Score

The Kolmogorov-Smirnov (KS) score is a statistic used to measure the difference between the cumulative distributions of two samples. In the context of model validation, particularly in credit risk modeling, the KS score is used to evaluate the discriminatory power of a predictive model. It measures the maximum separation between the cumulative distribution functions (CDF) of the positive (default) and negative (non-default) classes.

A higher KS score indicates that the model has a better ability to distinguish between the positive and negative classes. This is important in credit risk modeling because it shows how well the model can separate defaulters from non-defaulters.

In our case study, we compared the KS scores of the traditional Logistic Regression model and the AI-enhanced XGBoost model. The results are as follows:

Bar Chart 2. KS Score of Traditional Model vs AI Model

- Logistic Regression (Traditional Validation): The KS score for this model was observed to be around 0.31.

- XGBoost (AI-enhanced Validation): The KS score for this model was higher, around 0.38.

The higher KS score of the XGBoost model indicates a superior ability to distinguish between default and non-default clients compared to the Logistic Regression model. The AI-enhanced model provides a clearer separation between the two classes, making it more reliable for identifying high-risk clients.
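The KS score can be computed by walking through the accounts from the highest score to the lowest and tracking the gap between the cumulative share of defaulters and the cumulative share of non-defaulters seen so far. The sketch below is a minimal implementation of that idea on toy data (not the case-study data); tied scores are processed together so the gap is only measured at valid thresholds.

```python
def ks_score(labels, scores):
    """Maximum gap between the cumulative distributions of the two classes.

    labels: 1 for default, 0 for non-default.
    scores: model scores, where higher means more likely to default.
    """
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("both classes must be present")
    ranked = sorted(zip(scores, labels), reverse=True)
    seen_pos = seen_neg = 0
    best_gap = 0.0
    i = 0
    while i < len(ranked):
        j = i
        # Consume every account that shares the current score.
        while j < len(ranked) and ranked[j][0] == ranked[i][0]:
            if ranked[j][1] == 1:
                seen_pos += 1
            else:
                seen_neg += 1
            j += 1
        best_gap = max(best_gap, abs(seen_pos / n_pos - seen_neg / n_neg))
        i = j
    return best_gap

# Toy examples (not the case-study data).
overlapping = ks_score([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
separated = ks_score([0, 0, 1, 1], [0.1, 0.2, 0.8, 0.9])
```

When the two classes overlap, the maximum gap is well below one; when the scores separate the classes perfectly, the KS score reaches its maximum of 1.0.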

This case study has highlighted the comparative advantages of ML and AI techniques. Nevertheless, a more in-depth analysis indicates that transcending traditional AI requires a strategic approach to effectively utilize AI tools via dynamic model validation, as outlined in the subsequent section.

Dynamic Validation in Financial Systems

Financial institutions should consider implementing real-time data integration and continuous model updates. Such updates allow models to be rebuilt with data that has not been used before and with the most recent market information, using new parameter estimates to enhance predictive accuracy. For instance, incorporating real-time macroeconomic indicators or consumer behavior data can significantly improve the performance of credit risk models by providing a more current and accurate reflection of borrowers' risk profiles.

Another essential component of dynamic validation is the use of challenger models. A challenger model runs alongside the primary model, providing a continuous benchmark for comparison. This process involves algorithms and automation to constantly monitor new parameters and identify potential improvements. By comparing the primary model with the challenger model, financial institutions can gain valuable insights into the strengths and weaknesses of their models, enabling more informed decisions about necessary adjustments. For example, a financial institution could use a machine learning-based challenger model to continuously evaluate and compare the performance of a traditional credit risk model, thereby ensuring that the primary model remains up-to-date and competitive in a rapidly changing financial environment.
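A champion-challenger comparison can be sketched in a few lines. The example below scores the same recent outcomes with both models and flags the primary model for review when the challenger's Brier score (mean squared error of the predicted probabilities, lower is better) is better by more than a chosen margin. The choice of the Brier score, the margin of 0.01, and the sample probabilities are assumptions for illustration; in practice institutions would compare several metrics over a rolling window.

```python
def brier_score(labels, probabilities):
    """Mean squared difference between predicted probabilities and outcomes."""
    if len(labels) != len(probabilities) or not labels:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((p - y) ** 2 for y, p in zip(labels, probabilities)) / len(labels)

def challenger_review(labels, champion_probs, challenger_probs, min_improvement=0.01):
    """Compare both models on the same outcomes and flag a material gap.

    min_improvement: illustrative margin by which the challenger must beat
    the champion before a review is triggered (assumption).
    """
    champion = brier_score(labels, champion_probs)
    challenger = brier_score(labels, challenger_probs)
    return {
        "champion": champion,
        "challenger": challenger,
        "flag_for_review": (champion - challenger) > min_improvement,
    }

# Hypothetical recent outcomes and predictions from the two models.
recent_outcomes = [1, 0, 1, 0]
champion_probs = [0.6, 0.4, 0.6, 0.4]
challenger_probs = [0.9, 0.1, 0.9, 0.1]
review = challenger_review(recent_outcomes, champion_probs, challenger_probs)
```

A flag here does not mean the challenger should replace the primary model automatically; it signals that the gap is large enough to justify the informed review described above.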

Conclusion

The financial landscape is continually evolving, and model validation practices must evolve accordingly. By integrating real-time data, leveraging machine learning (ML) and artificial intelligence (AI), and implementing dynamic validation frameworks with continuous model updates, institutions can enhance the robustness and reliability of their models. These innovative approaches not only address the limitations of traditional validation methods but also better equip institutions to navigate future uncertainties effectively.

Disclaimer:

The opinions expressed in this paper are those of the author and are intended solely for informational purposes.